Introduction

Amazon S3 (Simple Storage Service) is a scalable, secure, and highly durable object storage service provided by Amazon Web Services (AWS). It allows you to store and retrieve large amounts of data efficiently. One feature that makes Amazon S3 powerful is multipart upload, which is particularly useful for large files or when network interruptions and errors are common. This post explains what multipart upload is, what its advantages are, and how to perform one on Amazon S3.

What is Multipart Upload?

Multipart upload is a feature of Amazon S3 that lets you upload a large object efficiently and reliably by breaking it into smaller parts. The parts can be uploaded independently, in any order and in parallel. When you complete the upload, Amazon S3 assembles them into the final object. This approach offers several benefits:

Resumable Uploads: If an error occurs during the upload process, you can retry uploading only the failed parts instead of the entire file. This is crucial for large files and unreliable network connections.

Improved Throughput: Multipart uploads can significantly improve upload speeds as you can upload multiple parts simultaneously.

Optimal Memory Usage: Uploading large files as a single object might consume a lot of memory. Multipart uploads allow you to work with smaller parts, reducing the memory footprint.

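Metadata Support: You can still set object metadata, such as custom metadata, content type, or access control settings, when you initiate the upload. These settings apply to the final assembled object. Individual parts cannot carry their own metadata.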
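To make the resumable-upload benefit concrete, here is a minimal Python sketch. It assumes you keep a local record of each part's expected size, and it compares that record with the JSON printed by aws s3api list-parts. The names expected_sizes and parts_to_retry are hypothetical. Any part that S3 has not recorded, or has recorded with a different size, is a candidate for re-upload.

```python
import json


def parts_to_retry(expected_sizes, list_parts_output):
    """Return the part numbers that still need uploading.

    expected_sizes is a hypothetical local record: part number -> size in bytes.
    list_parts_output is the JSON text printed by `aws s3api list-parts`.
    A part needs (re)uploading if S3 has no record of it or recorded a
    different size.
    """
    data = json.loads(list_parts_output) if list_parts_output.strip() else {}
    uploaded = {p["PartNumber"]: p["Size"] for p in data.get("Parts", [])}
    return sorted(n for n, size in expected_sizes.items() if uploaded.get(n) != size)
```

Re-uploading a part under the same part number replaces the earlier copy of that part. A retry therefore only needs the same UploadId and part number.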

When to Use Multipart Upload?

Multipart upload is particularly useful in the following scenarios:

Large Files: Amazon S3 recommends considering multipart upload once objects reach about 100 MB. A single PUT operation can upload at most 5 GB, so larger objects must use multipart upload.

Unreliable Network Connections: Where network interruptions are common, multipart upload limits how much data must be re-sent after a failure, because you retry only the affected parts.

Streaming Data: For applications that stream data directly to Amazon S3, multipart upload is a more efficient choice because you can start uploading parts before you know the final object size.

Custom Metadata: Multipart upload supports custom metadata and access control settings. You specify them when the upload is initiated, and they apply to the whole object rather than to individual parts.
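The size guidance above can be summarized as a small decision helper. This is a sketch that rests on several assumptions. upload_strategy is a hypothetical name. The thresholds are the 100 MB recommendation and the 5 GB single-PUT limit, both read as binary units here. None stands for a stream whose final size is not yet known.

```python
from typing import Optional

MIB = 1024 ** 2
GIB = 1024 ** 3
RECOMMENDED_THRESHOLD = 100 * MIB  # "about 100 MB" guidance, read as MiB (assumption)
SINGLE_PUT_LIMIT = 5 * GIB         # largest object one PUT can upload, read as GiB (assumption)


def upload_strategy(size_bytes: Optional[int]) -> str:
    """Pick an upload method from the object size; None means the size is unknown."""
    if size_bytes is None:
        # Streaming case: parts can be sent before the total size is known.
        return "multipart (size unknown, e.g. streaming)"
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if size_bytes > SINGLE_PUT_LIMIT:
        return "multipart (required)"
    if size_bytes >= RECOMMENDED_THRESHOLD:
        return "multipart (recommended)"
    return "single PUT"
```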

Best Practices for Multipart Upload

Here are some best practices to follow when implementing multipart upload on Amazon S3:

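Part Size: Choose a part size that suits your use case. Smaller parts lose less work when a part fails, which helps on unreliable connections. Larger parts mean fewer requests and better efficiency. Every part except the last must be at least 5 MiB, a single part can be at most 5 GiB, and an upload can have at most 10,000 parts.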

Error Handling: Implement robust error handling, as failures can occur during any part of the multipart upload process.

Optimal Concurrency: Choose how many parts to upload in parallel based on your available bandwidth, CPU, and memory. AWS SDKs often provide concurrency options to fine-tune this.

Monitoring and Logging: Use Amazon CloudWatch and Amazon S3 server access logs to monitor and log your multipart uploads for tracking and troubleshooting.

Lifecycle Policies: Configure a lifecycle rule that aborts incomplete multipart uploads after a set number of days. This keeps unfinished uploads from consuming, and incurring charges for, storage.
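The part-size, error-handling, concurrency, and lifecycle practices above can be combined in one sketch. It is a minimal illustration, not an SDK, and it rests on several assumptions:

- upload_part is a hypothetical callable you supply, for example one that wraps aws s3api upload-part or an SDK call. It takes a part number, byte offset, and length, and returns the part's ETag.
- OSError stands in for whatever transient errors your client raises.
- The 8 MiB preferred part size and the 7-day abort window are arbitrary example values.

The constants encode S3's multipart limits described above.

```python
import json
import math
import random
import time
from concurrent.futures import ThreadPoolExecutor

MIN_PART_SIZE = 5 * 1024 ** 2  # 5 MiB: minimum for every part except the last
MAX_PART_SIZE = 5 * 1024 ** 3  # 5 GiB: maximum size of a single part
MAX_PARTS = 10_000             # maximum number of parts in one upload


def choose_part_size(file_size, preferred=8 * 1024 ** 2):
    """Use the preferred size unless it breaks the 5 MiB or 10,000-part limits."""
    size = max(preferred, MIN_PART_SIZE, math.ceil(file_size / MAX_PARTS))
    if size > MAX_PART_SIZE:
        raise ValueError("file is too large for the multipart limits")
    return size


def part_ranges(file_size, part_size):
    """Return (part_number, offset, length) tuples; part numbers start at 1."""
    count = max(1, math.ceil(file_size / part_size))
    return [(n + 1, n * part_size, min(part_size, file_size - n * part_size))
            for n in range(count)]


def upload_with_retry(upload_part, number, offset, length, attempts=3, base_delay=0.5):
    """Retry one part with exponential backoff plus jitter; re-raise after the last try."""
    for attempt in range(1, attempts + 1):
        try:
            return upload_part(number, offset, length)
        except OSError:  # stand-in for your client's transient errors (assumption)
            if attempt == attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay))


def upload_all_parts(upload_part, file_size, part_size, max_workers=4, base_delay=0.5):
    """Upload parts concurrently; return the part list complete-multipart-upload expects."""
    ranges = part_ranges(file_size, part_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(upload_with_retry, upload_part, n, off, length,
                               base_delay=base_delay)
                   for n, off, length in ranges]
        etags = [f.result() for f in futures]  # re-raises if a part ultimately failed
    return [{"PartNumber": n, "ETag": etag} for (n, _, _), etag in zip(ranges, etags)]


def abort_incomplete_rule(days=7):
    """Lifecycle configuration that aborts uploads still incomplete after `days` days."""
    return {"Rules": [{
        "ID": "abort-incomplete-multipart-uploads",
        "Status": "Enabled",
        "Filter": {"Prefix": ""},
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
    }]}


if __name__ == "__main__":
    print(json.dumps(abort_incomplete_rule(), indent=2))
```

To apply the lifecycle rule, you could save the printed JSON to lifecycle.json and run the following command:

aws s3api put-bucket-lifecycle-configuration --bucket multipartupload-demo-oct --lifecycle-configuration file://lifecycle.json

This call replaces any lifecycle configuration the bucket already has.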

How to Implement Multipart Upload on Amazon S3

Let's go through the steps to implement a multipart upload on Amazon S3:

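Split the Video File: Use the split command to break the video file into smaller parts, for example split -b 100M videoplayback.mp4. The 100M value is only an example size; every part except the last must be at least 5 MiB. By default, split names the pieces xaa, xab, and so on.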
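If the split utility is not available (for example, on Windows), the following Python sketch approximates split -b with its default naming. It assumes the default x prefix and two-letter suffixes, which cover the first 676 pieces. split_file is a hypothetical helper, not part of any AWS tool.

```python
import itertools
import string
from pathlib import Path


def split_suffixes():
    """Yield split's default two-letter suffixes: aa, ab, ..., zz."""
    for first, second in itertools.product(string.ascii_lowercase, repeat=2):
        yield first + second


def split_file(path, part_size, prefix="x", out_dir="."):
    """Write consecutive part_size-byte pieces of `path` to prefix+suffix files.

    Returns the file names written, in order; the last piece may be shorter.
    """
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    out = Path(out_dir)
    names = []
    with open(path, "rb") as src:
        for suffix in split_suffixes():
            chunk = src.read(part_size)  # holds at most one part in memory
            if not chunk:
                break
            (out / f"{prefix}{suffix}").write_bytes(chunk)
            names.append(f"{prefix}{suffix}")
        else:
            # All 676 suffixes were used; fail if any input remains unwritten.
            if src.read(1):
                raise ValueError("too many pieces for two-letter suffixes")
    return names
```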

Create an S3 Bucket: If you haven't already, create an S3 bucket to upload the video parts to. Replace multipartupload-demo-oct with your desired bucket name. Remember that S3 bucket names must be globally unique.

aws s3api create-bucket --bucket multipartupload-demo-oct

Outside the us-east-1 Region, you must also pass --create-bucket-configuration LocationConstraint=<your-region>.

Initiate a Multipart Upload: Initiate the multipart upload for the video file. You will receive an UploadId, which you need for the subsequent steps.

aws s3api create-multipart-upload --bucket multipartupload-demo-oct --key videoplayback.mp4

This command will return an upload-id like

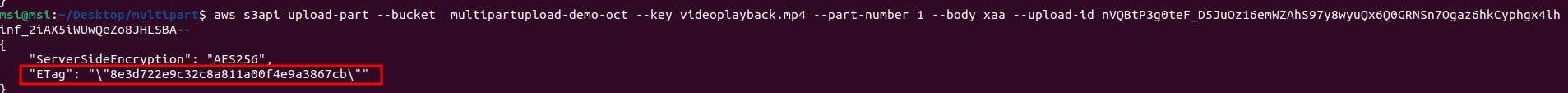

nVQBtP3g0teF_D5JuOz16emWZAhS97y8wyuQx6Q0GRNSn7Ogaz6hkCyphgx4lhinf_2iAX5iWUwQeZo8JHLSBA--Upload the Parts: Now, you'll upload the two video parts,

xaaandxab, as individual parts of Amazon S3. You must specify the part number and provide the UploadId obtained in the previous step.(I) Upload the first part (

xaa):This will return an ETag for the uploaded part, something like."

9d15c7757230b2f88a47906bdc254c07"

aws s3api upload-part --bucket DOC-EXAMPLE-BUCKET --key large_test_file --part-number 1 --body large_test_file.001 --upload-id

(II) Upload the second part (xab). This also returns an ETag for the second part, something like "b104d08a3208c1b16ce4dbccca9d8d34".

aws s3api upload-part --bucket multipartupload-demo-oct --key videoplayback.mp4 --part-number 2 --body xab --upload-id nVQBtP3g0teF_D5JuOz16emWZAhS97y8wyuQx6Q0GRNSn7Ogaz6hkCyphgx4lhinf_2iAX5iWUwQeZo8JHLSBA--

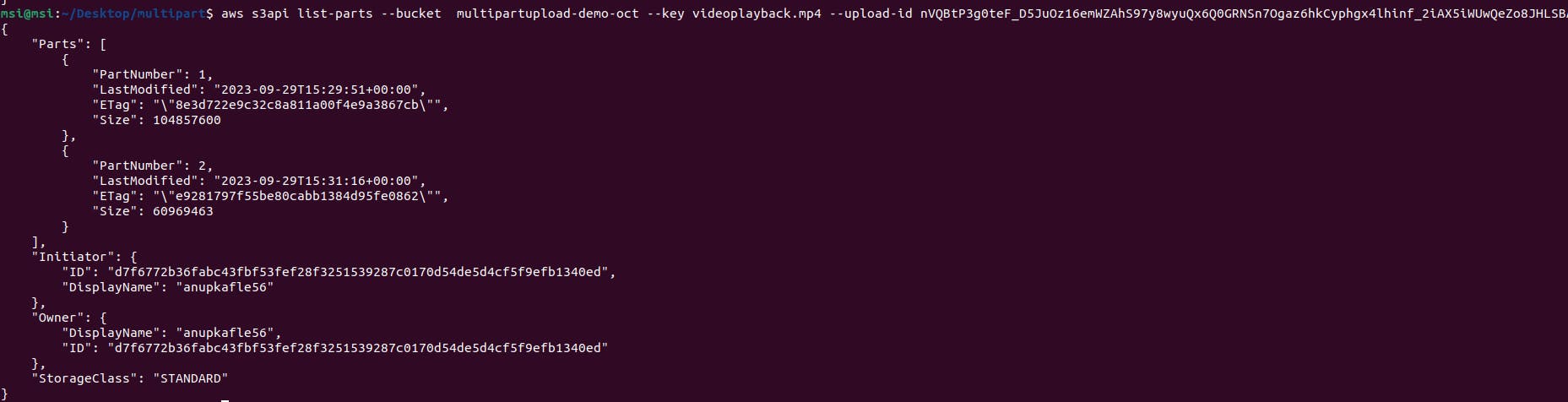
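Typing an upload-part command for each part gets tedious beyond a couple of parts. The following sketch reads the Bucket, Key, and UploadId fields from the JSON printed by aws s3api create-multipart-upload, then generates one upload-part command line per part file. upload_part_commands is a hypothetical helper. It assumes the part files are listed in upload order (xaa, xab, ...) and only builds the commands; it does not run them.

```python
import json
import shlex


def upload_part_commands(create_output, part_files):
    """Build one `aws s3api upload-part` command line per part file.

    create_output is the JSON printed by `aws s3api create-multipart-upload`.
    part_files must already be in upload order, because part numbers are
    assigned 1, 2, 3, ... in that order.
    """
    upload = json.loads(create_output)
    commands = []
    for number, body in enumerate(part_files, start=1):
        argv = [
            "aws", "s3api", "upload-part",
            "--bucket", upload["Bucket"],
            "--key", upload["Key"],
            "--part-number", str(number),
            "--body", body,
            "--upload-id", upload["UploadId"],
        ]
        commands.append(shlex.join(argv))  # shell-quotes any argument that needs it
    return commands


if __name__ == "__main__":
    sample = ('{"Bucket": "multipartupload-demo-oct", "Key": "videoplayback.mp4", '
              '"UploadId": "EXAMPLE-UPLOAD-ID"}')
    for line in upload_part_commands(sample, ["xaa", "xab"]):
        print(line)
```

If you only need the ID in a shell variable, add the AWS CLI's global --query UploadId --output text options to create-multipart-upload. The command then prints just the UploadId.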

List the ETags: Use the aws s3api list-parts command to list the part numbers and ETags of the uploaded parts:

aws s3api list-parts --bucket multipartupload-demo-oct --key videoplayback.mp4 --upload-id nVQBtP3g0teF_D5JuOz16emWZAhS97y8wyuQx6Q0GRNSn7Ogaz6hkCyphgx4lhinf_2iAX5iWUwQeZo8JHLSBA--

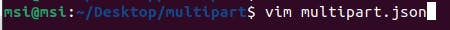
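To double-check the ETags that list-parts reports, you can compute MD5 digests of the local part files. This relies on an assumption: for parts uploaded without SSE-KMS or SSE-C encryption, the part's ETag is typically its MD5 digest in hex, wrapped in double quotes. With those encryption modes the ETag is not an MD5 digest, so the comparison does not apply. local_etag and etag_matches are hypothetical helper names.

```python
import hashlib


def local_etag(path, chunk_size=1024 * 1024):
    """Hex MD5 of a local part file, read in chunks to limit memory use."""
    md5 = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            md5.update(chunk)
    return md5.hexdigest()


def etag_matches(path, etag):
    """Compare a local part with an ETag string as S3 reports it (quotes included)."""
    return local_etag(path) == etag.strip('"')
```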

Create a JSON file named multipart.json that lists each uploaded part's PartNumber and ETag under a Parts key.
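Rather than writing multipart.json by hand, you can derive it from the list-parts output. The following is a minimal sketch that assumes the JSON shape list-parts prints, a Parts array whose entries carry PartNumber and ETag. It copies each ETag exactly as reported and sorts the entries by part number, because S3 expects the list in ascending order. build_complete_payload and write_multipart_json are hypothetical names.

```python
import json


def build_complete_payload(list_parts_output):
    """Turn `aws s3api list-parts` JSON into the structure complete-multipart-upload expects."""
    parts = json.loads(list_parts_output).get("Parts", [])
    ordered = sorted(parts, key=lambda part: part["PartNumber"])  # ascending part numbers
    # Keep only the two fields the completion request needs; ETags are copied verbatim.
    return {"Parts": [{"ETag": p["ETag"], "PartNumber": p["PartNumber"]} for p in ordered]}


def write_multipart_json(list_parts_output, path="multipart.json"):
    """Write the payload to `path` for use with --multipart-upload file://multipart.json."""
    payload = build_complete_payload(list_parts_output)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(payload, f, indent=2)
    return payload
```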

Complete the Multipart Upload: After uploading all parts, ask Amazon S3 to complete the multipart upload. Pass the UploadId and the part list from multipart.json, which holds the part numbers and ETags of the two parts in ascending part-number order, so that S3 knows which parts to assemble.

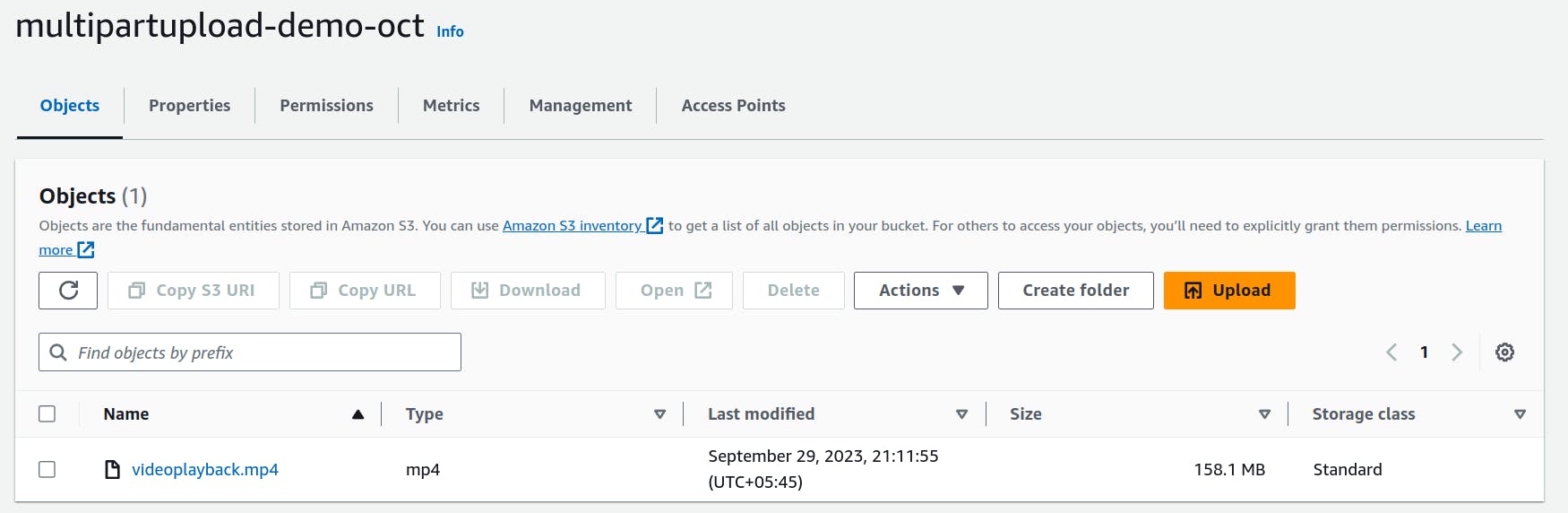

aws s3api complete-multipart-upload --multipart-upload file://multipart.json --bucket multipartupload-demo-oct --key videoplayback.mp4 --upload-id nVQBtP3g0teF_D5JuOz16emWZAhS97y8wyuQx6Q0GRNSn7Ogaz6hkCyphgx4lhinf_2iAX5iWUwQeZo8JHLSBA--

This command completes the upload, and your video file, videoplayback.mp4, becomes available in your S3 bucket.

That's it! You've performed a multipart upload of your video file using the AWS CLI. Because each part is uploaded separately, a network interruption only requires you to re-upload the affected parts rather than the whole file.

Conclusion

Multipart upload on Amazon S3 is valuable for anyone working with large files, providing a reliable, efficient, and flexible way to transfer data. By understanding how it works and following the best practices above, you can make large uploads to the cloud resilient even under challenging network conditions.